Coriolis Effect Demonstration (with Drones)

We demonstrate how rotating reference frames give rise to the Coriolis effect and centrifugal acceleration. In this video, we approach this as a simple physics demonstration and examine...

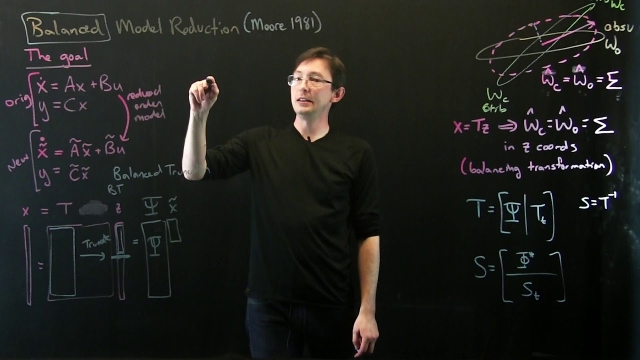

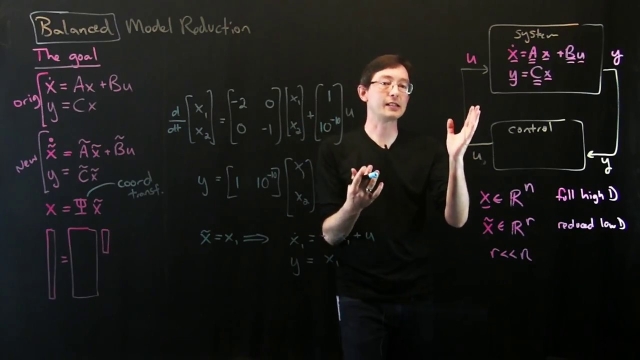

See MoreData-Driven Control: Balancing Transformation

In this lecture, we derive the balancing coordinate transformation that makes the controllability and observability Gramians equal and diagonal. This is the critical step in balanced model...
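
The standard algebra behind this step can be summarized as follows. This is a sketch assuming a continuous-time state-space model $(A, B, C)$ with controllability Gramian $W_c$, observability Gramian $W_o$, and a change of coordinates $x = T\tilde{x}$; the notation is ours and may differ from the lecture's.

$$
\begin{aligned}
\tilde{A} &= T^{-1} A T, \qquad \tilde{B} = T^{-1} B, \qquad \tilde{C} = C T,\\
\tilde{W}_c &= T^{-1} W_c T^{-*}, \qquad \tilde{W}_o = T^{*} W_o T,\\
\tilde{W}_c &= \tilde{W}_o = \Sigma = \operatorname{diag}(\sigma_1, \ldots, \sigma_n)
\;\Longrightarrow\; T^{-1} W_c W_o T = \Sigma^{2}.
\end{aligned}
$$

So a balancing transformation diagonalizes the product $W_c W_o$, and the $\sigma_j$ (the Hankel singular values) are the square roots of its eigenvalues. The eigenvectors fix the columns of $T$ only up to scaling; that scaling is then chosen so the two transformed Gramians are equal.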

Parseval's Theorem

Parseval's theorem is an important result in Fourier analysis that can be used to put guarantees on the accuracy of signal approximation in the Fourier domain.
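
A minimal Python sketch of the discrete analogue, assuming the unnormalized DFT convention $X_k = \sum_n x_n e^{-2\pi i k n/N}$ (the video may work with a different transform or normalization). It checks that time-domain and frequency-domain energy agree, then uses the same identity to show that zeroing Fourier coefficients gives a squared reconstruction error equal to the discarded spectral energy divided by $N$. The test signal and the cutoff `r` are arbitrary illustrative choices, and the naive O(N²) DFT stands in for an FFT.

```python
import cmath
import math


def dft(x):
    """Naive O(N^2) DFT with the convention X[k] = sum_n x[n] exp(-2*pi*i*k*n/N)."""
    n_pts = len(x)
    return [
        sum(x[n] * cmath.exp(-2j * math.pi * k * n / n_pts) for n in range(n_pts))
        for k in range(n_pts)
    ]


def idft(X):
    """Inverse of dft(): x[n] = (1/N) sum_k X[k] exp(+2*pi*i*k*n/N)."""
    n_pts = len(X)
    return [
        sum(X[k] * cmath.exp(2j * math.pi * k * n / n_pts) for k in range(n_pts)) / n_pts
        for n in range(n_pts)
    ]


# Hypothetical test signal: two sinusoids sampled at 64 points
signal = [math.sin(0.3 * n) + 0.5 * math.cos(1.7 * n) for n in range(64)]
spectrum = dft(signal)

# Parseval: time-domain energy equals (1/N) times frequency-domain energy for this convention
energy_time = sum(abs(v) ** 2 for v in signal)
energy_freq = sum(abs(c) ** 2 for c in spectrum) / len(spectrum)

# Keep the r largest-magnitude coefficients, zero the rest, and reconstruct
r = 10
keep = set(sorted(range(len(spectrum)), key=lambda k: abs(spectrum[k]), reverse=True)[:r])
approx = idft([c if k in keep else 0 for k, c in enumerate(spectrum)])

# Parseval again: squared reconstruction error equals (1/N) times the discarded spectral energy
error = sum(abs(a - b) ** 2 for a, b in zip(signal, approx))
discarded = sum(abs(c) ** 2 for k, c in enumerate(spectrum) if k not in keep) / len(spectrum)
print(energy_time, energy_freq)
print(error, discarded)
```

Because the error is fully determined by the dropped coefficients, the energy left out of a truncated spectrum is an exact measure of approximation quality.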

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 7 - Imitation...

Professor Emma Brunskill

Assistant Professor, Computer Science

Stanford AI for Human Impact Lab

Stanford Artificial Intelligence Lab

Statistical Machine Learning Group

Trimming a Model of a Dynamic System Using Numerical Optimization

In this video we show how to find a trim point of a dynamic system using numerical optimization techniques. We generate a cost function that corresponds to a straight and level flight...
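
The same idea can be sketched on a much simpler system than an aircraft. This example assumes a hypothetical damped pendulum (`pendulum`, with made-up parameters) and asks for the angular rate and torque that hold it still at 30 degrees. The cost is the sum of squared state derivatives, which is zero exactly at a trim point. Plain gradient descent with finite-difference gradients (`minimize`, a hypothetical helper) stands in for whatever optimizer the video uses.

```python
import math


def pendulum(x, u, g_over_l=9.81, damping=0.5):
    """Hypothetical damped pendulum: x = [angle, angular rate], u = scalar torque."""
    theta, omega = x
    return [omega, -g_over_l * math.sin(theta) - damping * omega + u]


def trim_cost(z, theta_trim):
    """Sum of squared state derivatives; zero exactly at a trim (equilibrium) point."""
    omega, u = z
    return sum(v * v for v in pendulum([theta_trim, omega], u))


def minimize(cost, z0, step=0.2, iters=2000, eps=1e-6):
    """Plain gradient descent using central-difference gradients."""
    z = list(z0)
    for _ in range(iters):
        grad = []
        for j in range(len(z)):
            zp, zm = list(z), list(z)
            zp[j] += eps
            zm[j] -= eps
            grad.append((cost(zp) - cost(zm)) / (2 * eps))
        z = [zi - step * gi for zi, gi in zip(z, grad)]
    return z


theta_trim = math.radians(30.0)
omega_trim, u_trim = minimize(lambda z: trim_cost(z, theta_trim), [0.0, 0.0])
print(omega_trim, u_trim)  # angular rate near 0, torque near 9.81 * sin(30 deg)
```

In a real trim problem the decision variables and cost terms (for example, enforcing straight and level flight) come from the model, but the structure is the same: encode "all derivatives that should vanish" as a cost and minimize it.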

Data-Driven Control: Eigensystem Realization Algorithm Procedure

In this lecture, we describe the eigensystem realization algorithm (ERA) in detail, including step-by-step algorithmic instructions.
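
The first step of ERA, stacking impulse-response samples into a Hankel matrix and its one-step-shifted copy, can be sketched in plain Python for a single-input, single-output system. This covers only that step; the SVD, truncation, and realization steps that follow need a linear algebra library and are not shown. The function name `era_hankel` and the first-order example system are assumptions for illustration.

```python
def era_hankel(markov, rows, cols):
    """Hankel matrix H and its one-step-shifted version H2 from SISO impulse-response samples.

    Assumes markov[k] = C A^k B, i.e. the response at step k + 1 (feedthrough D excluded).
    """
    needed = rows + cols  # H2 reads up to index rows + cols - 1
    if len(markov) < needed:
        raise ValueError(f"need at least {needed} samples, got {len(markov)}")
    H = [[markov[i + j] for j in range(cols)] for i in range(rows)]
    H2 = [[markov[i + j + 1] for j in range(cols)] for i in range(rows)]
    return H, H2


# Hypothetical first-order system x_{k+1} = 0.5 x_k + u_k, y_k = x_k, so C A^k B = 0.5**k
markov = [0.5 ** k for k in range(6)]
H, H2 = era_hankel(markov, rows=3, cols=3)
for row in H:
    print(row)
# For this one-state system every entry of H2 is exactly 0.5 times the matching entry of H
print(all(h2 == 0.5 * h for r, r2 in zip(H, H2) for h, h2 in zip(r, r2)))  # True
```

The shift relation between `H2` and `H` is what ERA exploits: in general the shifted Hankel matrix factors as observability matrix times $A$ times controllability matrix, and the SVD of `H` recovers those factors.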

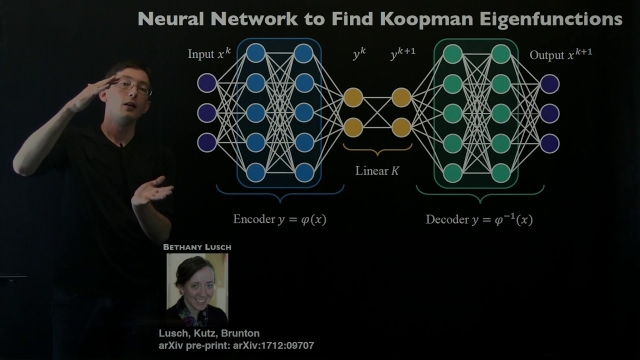

See MoreKoopman Spectral Analysis (Representations)

In this video, we explore how to obtain finite-dimensional representations of the Koopman operator from data, using regression.
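
A minimal regression sketch in the style of extended dynamic mode decomposition, which may or may not match the video's exact formulation. It assumes a hypothetical scalar system $x_{k+1} = 0.9\,x_k$ and a hypothetical dictionary of observables $\psi(x) = [x, x^2]$, then fits the matrix $K$ that best maps $\psi(x_k)$ to $\psi(x_{k+1})$ in the least-squares sense, $K = (Y X^{\top})(X X^{\top})^{-1}$. A 2×2 inverse is written out by hand so the example needs no libraries.

```python
def observables(x):
    """Hypothetical dictionary of observables: psi(x) = [x, x^2]."""
    return [x, x * x]


def step(x, lam=0.9):
    """Hypothetical linear map x_{k+1} = lam * x_k used to generate data."""
    return lam * x


def fit_koopman_2x2(pairs):
    """Least-squares K with psi(x_next) ~= K psi(x_now), via K = (Y X^T)(X X^T)^{-1}."""
    gxx = [[0.0, 0.0], [0.0, 0.0]]  # accumulates X X^T
    gyx = [[0.0, 0.0], [0.0, 0.0]]  # accumulates Y X^T
    for x_now, x_next in pairs:
        p, q = observables(x_now), observables(x_next)
        for i in range(2):
            for j in range(2):
                gxx[i][j] += p[i] * p[j]
                gyx[i][j] += q[i] * p[j]
    det = gxx[0][0] * gxx[1][1] - gxx[0][1] * gxx[1][0]
    inv = [[gxx[1][1] / det, -gxx[0][1] / det], [-gxx[1][0] / det, gxx[0][0] / det]]
    return [[sum(gyx[i][k] * inv[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


# Snapshot pairs from several hypothetical initial conditions
pairs = []
for x0 in (-1.5, -1.0, -0.5, 0.5, 1.0, 1.5):
    x = x0
    for _ in range(10):
        pairs.append((x, step(x)))
        x = step(x)

K = fit_koopman_2x2(pairs)
print(K)  # close to [[0.9, 0.0], [0.0, 0.81]]
```

Because $x$ and $x^2$ span a Koopman-invariant subspace for this system, the fit is exact up to round-off, and the diagonal entries $0.9$ and $0.81 = 0.9^2$ are Koopman eigenvalues. For a nonlinear system with a poorly chosen dictionary, the regression would only give an approximation.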

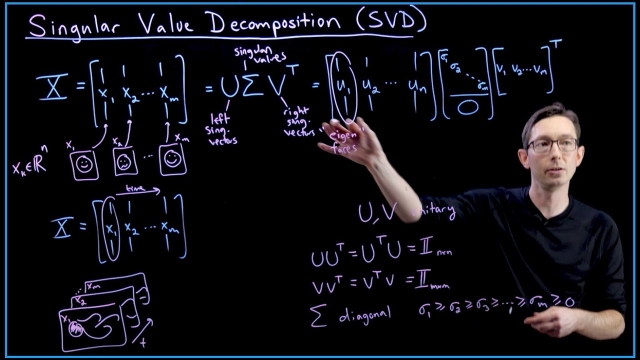

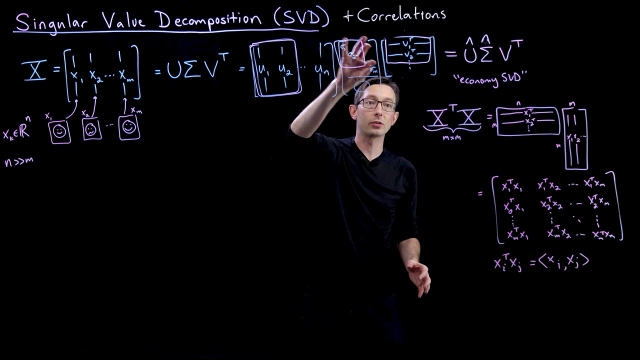

See MoreSingular Value Decomposition (SVD): Mathematical Overview

This video presents a mathematical overview of the singular value decomposition (SVD).
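
For reference, the decomposition can be stated as follows, assuming a tall data matrix $X \in \mathbb{C}^{n \times m}$ with $n \ge m$ (a common setup for snapshot data, not necessarily the one used in the video):

$$
X = U \Sigma V^{*}, \qquad
\Sigma = \begin{bmatrix} \hat{\Sigma} \\ 0 \end{bmatrix}, \qquad
\hat{\Sigma} = \operatorname{diag}(\sigma_1, \ldots, \sigma_m), \qquad
\sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_m \ge 0,
$$

where $U \in \mathbb{C}^{n \times n}$ and $V \in \mathbb{C}^{m \times m}$ are unitary. The economy form keeps only the first $m$ columns of $U$, giving $X = \hat{U} \hat{\Sigma} V^{*}$, and truncating to the leading $r$ terms, $X_r = U_r \Sigma_r V_r^{*}$, gives the best rank-$r$ approximation of $X$ in both the 2-norm and the Frobenius norm (the Eckart-Young theorem).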

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 5 - Value Fun...

Professor Emma Brunskill

RL Course by David Silver - Lecture 3: Planning by Dynamic Programming

Introduces policy evaluation, policy iteration, value iteration, extensions to dynamic programming, and contraction mapping.
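
Value iteration fits in a few lines of Python. This sketch assumes a hypothetical two-state, two-action MDP stored as `transitions[s][a]`, a list of `(probability, next_state, reward)` tuples; the helper names are ours. It repeatedly applies the Bellman optimality backup until successive value estimates stop changing, then reads off the greedy policy.

```python
def q_value(transitions, values, s, a, gamma):
    """Expected return of taking action a in state s, then following the current value estimate."""
    return sum(p * (r + gamma * values[s_next]) for p, s_next, r in transitions[s][a])


def value_iteration(transitions, gamma=0.9, tol=1e-10, max_iters=10_000):
    """transitions[s][a] is a list of (probability, next_state, reward) tuples."""
    values = [0.0] * len(transitions)
    for _ in range(max_iters):
        # Bellman optimality backup, applied to every state synchronously
        new_values = [
            max(q_value(transitions, values, s, a, gamma) for a in transitions[s])
            for s in range(len(transitions))
        ]
        delta = max(abs(new - old) for new, old in zip(new_values, values))
        values = new_values
        if delta < tol:  # the backup is a gamma-contraction, so changes shrink geometrically
            break
    # Act greedily with respect to the converged values
    policy = [
        max(transitions[s], key=lambda a, s=s: q_value(transitions, values, s, a, gamma))
        for s in range(len(transitions))
    ]
    return values, policy


# Hypothetical MDP: staying in state 1 pays 1 per step, everything else pays 0
transitions = [
    {"stay": [(1.0, 0, 0.0)], "move": [(1.0, 1, 0.0)]},
    {"stay": [(1.0, 1, 1.0)], "move": [(1.0, 0, 0.0)]},
]
values, policy = value_iteration(transitions, gamma=0.9)
print(values, policy)  # values close to [9.0, 10.0], policy ['move', 'stay']
```

The contraction-mapping argument is what guarantees the loop terminates: each backup shrinks the sup-norm distance to the optimal value function by at least a factor of `gamma`.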

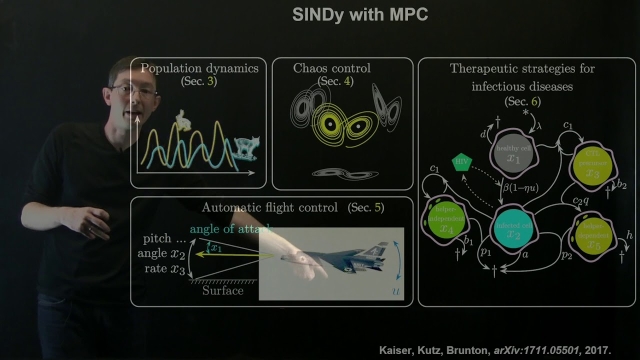

See MoreSparse Identification of Nonlinear Dynamics for Model Predictive Control

This lecture shows how to use sparse identification of nonlinear dynamics with control (SINDYc) with model predictive control to control nonlinear systems purely from data.
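
The identification half of this pipeline can be sketched with sequentially thresholded least squares, the sparse regression commonly used with SINDy; the model predictive control half is not shown. The sketch assumes a hypothetical system $\dot{x} = -2x + u$, a hypothetical candidate library $[x,\ x^2,\ u]$, and exact derivative data (real data would need derivatives estimated from noisy measurements). The least-squares solver is a small normal-equations routine so the example needs no libraries.

```python
import math


def solve_least_squares(rows, targets):
    """Solve min ||Theta xi - y||^2 via normal equations and Gaussian elimination with pivoting."""
    n = len(rows[0])
    # Augmented matrix [Theta^T Theta | Theta^T y]
    aug = [
        [sum(r[i] * r[j] for r in rows) for j in range(n)]
        + [sum(r[i] * y for r, y in zip(rows, targets))]
        for i in range(n)
    ]
    for col in range(n):
        pivot = max(range(col, n), key=lambda k: abs(aug[k][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for k in range(col + 1, n):
            factor = aug[k][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[k][j] -= factor * aug[col][j]
    xi = [0.0] * n
    for i in reversed(range(n)):
        xi[i] = (aug[i][n] - sum(aug[i][j] * xi[j] for j in range(i + 1, n))) / aug[i][i]
    return xi


def stlsq(rows, targets, threshold=0.1, iters=10):
    """Sequentially thresholded least squares: refit, zero small coefficients, repeat."""
    n = len(rows[0])
    active = list(range(n))
    xi = [0.0] * n
    for _ in range(iters):
        coef = solve_least_squares([[r[j] for j in active] for r in rows], targets)
        xi = [0.0] * n
        for j, c in zip(active, coef):
            xi[j] = c
        small = [j for j in active if abs(xi[j]) < threshold]
        if not small:
            break
        for j in small:
            xi[j] = 0.0
        active = [j for j in active if j not in small]
    return xi


# Hypothetical data from dx/dt = -2x + u with a known forcing signal u
samples = [(0.1 * i - 1.0, math.sin(0.7 * i)) for i in range(21)]
rows = [[x, x * x, u] for x, u in samples]   # candidate library: x, x^2, u
targets = [-2.0 * x + u for x, u in samples]  # measured dx/dt (exact here)
xi = stlsq(rows, targets)
print(xi)  # close to [-2.0, 0.0, 1.0]: the x^2 term is pruned
```

Including the input `u` as a library column is what distinguishes the "with control" variant: the identified model can then be simulated forward for candidate input sequences inside an MPC loop.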

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 6 - CNNs and ...

Professor Emma Brunskill

RL Course by David Silver - Lecture 8: Integrating Learning and Planning

Introduces model-based RL, along with integrated architectures and simulation-based search.

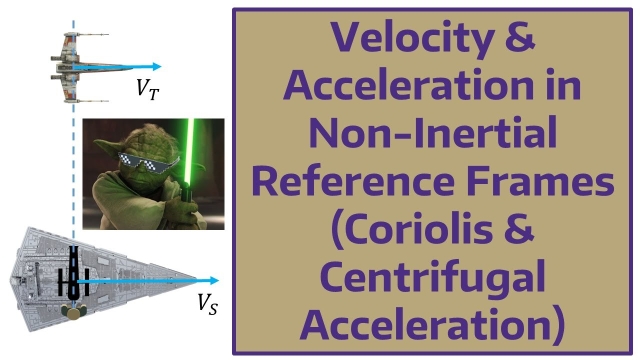

See MoreVelocity & Acceleration in Non-Inertial Reference Frames (Coriolis &...

In this video, we derive a mathematical description of velocity and acceleration in non-inertial reference frames. We examine the effect of fictitious forces that are witnessed by observers on...
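
A small Python sketch of the two fictitious accelerations, assuming a frame rotating at constant angular velocity $\Omega$ about a non-accelerating origin. Under those assumptions $a_{\text{rot}} = a_{\text{in}} - 2\,\Omega \times v - \Omega \times (\Omega \times r)$, where $r$ and $v$ are measured in the rotating frame; the Euler term $\dot{\Omega} \times r$ vanishes. The function names are ours.

```python
def cross(a, b):
    """Cross product of two 3-vectors given as lists."""
    return [
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    ]


def fictitious_accelerations(omega, r, v):
    """Coriolis and centrifugal accelerations seen in a frame rotating at constant omega.

    r and v are position and velocity measured in the rotating frame.
    """
    coriolis = [-2.0 * c for c in cross(omega, v)]
    centrifugal = [-c for c in cross(omega, cross(omega, r))]
    return coriolis, centrifugal


# Frame spinning counterclockwise about +z at 1 rad/s; object at x = 1 moving in +x
coriolis, centrifugal = fictitious_accelerations([0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [1.0, 0.0, 0.0])
print(coriolis)     # points along -y: deflection to the right of the motion
print(centrifugal)  # points along +x: radially outward
```

For counterclockwise rotation, the Coriolis term deflects moving objects to the right of their direction of travel, while the centrifugal term always points away from the rotation axis regardless of velocity.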

Data-Driven Control: Balanced Truncation

In this lecture, we describe the balanced truncation procedure for model reduction, where a handful of the most controllable and observable state directions are kept for the reduced-order...
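
In balanced coordinates the truncation step amounts to a block partition. The notation below is a sketch and may differ from the lecture's: keep the first $r$ states, whose Hankel singular values are collected in $\Sigma_r$.

$$
\tilde{A} = \begin{bmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{bmatrix}, \qquad
\tilde{B} = \begin{bmatrix} B_1 \\ B_2 \end{bmatrix}, \qquad
\tilde{C} = \begin{bmatrix} C_1 & C_2 \end{bmatrix}, \qquad
\Sigma = \begin{bmatrix} \Sigma_r & 0 \\ 0 & \Sigma_t \end{bmatrix},
$$

and the reduced-order model is $(A_{11}, B_1, C_1)$. For a stable continuous-time system, its transfer function $G_r$ satisfies the standard error bound

$$
\| G - G_r \|_{\infty} \le 2 \sum_{j=r+1}^{n} \sigma_j .
$$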

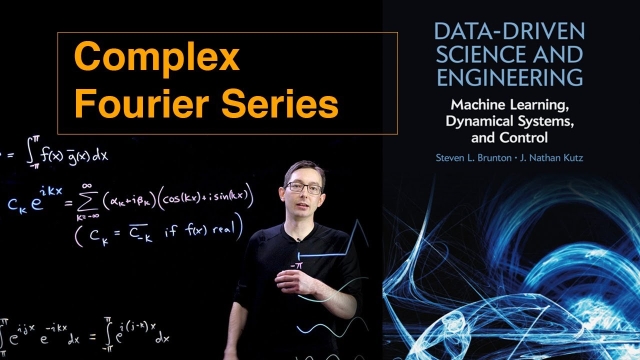

See MoreComplex Fourier Series

This video describes how the Fourier series can be written efficiently in terms of complex variables.
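
A short Python sketch of the complex form, assuming $2\pi$-periodic functions on $[-\pi, \pi)$ with $f(x) = \sum_k c_k e^{ikx}$ and $c_k = \frac{1}{2\pi}\int_{-\pi}^{\pi} f(x)\, e^{-ikx}\, dx$. The coefficients are approximated with a uniform Riemann sum, and the test function is a hypothetical choice whose coefficients are known in closed form.

```python
import cmath
import math


def fourier_coefficient(f, k, n_samples=256):
    """Approximate c_k = (1/(2*pi)) * integral_{-pi}^{pi} f(x) exp(-i*k*x) dx with a Riemann sum."""
    dx = 2 * math.pi / n_samples
    total = 0j
    for j in range(n_samples):
        x = -math.pi + j * dx
        total += f(x) * cmath.exp(-1j * k * x)
    return total * dx / (2 * math.pi)


def partial_sum(coeffs, x):
    """Evaluate the truncated series sum_k c_k exp(i*k*x) over the stored coefficients."""
    return sum(c * cmath.exp(1j * k * x) for k, c in coeffs.items())


def f(x):
    # Hypothetical test function: cos(x) + 2 sin(3x)
    return math.cos(x) + 2 * math.sin(3 * x)


coeffs = {k: fourier_coefficient(f, k) for k in range(-5, 6)}
print(coeffs[1], coeffs[3])  # approximately 0.5 and -1j
print(partial_sum(coeffs, 0.7), f(0.7))
```

Since $\cos x = \tfrac{1}{2}(e^{ix} + e^{-ix})$ and $2\sin 3x = -i\,e^{3ix} + i\,e^{-3ix}$, the expected coefficients are $c_{\pm 1} = \tfrac{1}{2}$, $c_3 = -i$, and $c_{-3} = i$. For any real-valued $f$, the coefficients come in conjugate pairs, $c_{-k} = \overline{c_k}$, which is why the complex form is a compact repackaging of the sine and cosine series.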

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 11 - Fast Rei...

Professor Emma Brunskill

Assistant Professor, Computer Science

Stanford AI for Human Impact Lab

Stanford Artificial Intelligence Lab

Statistical Machine Learning Group

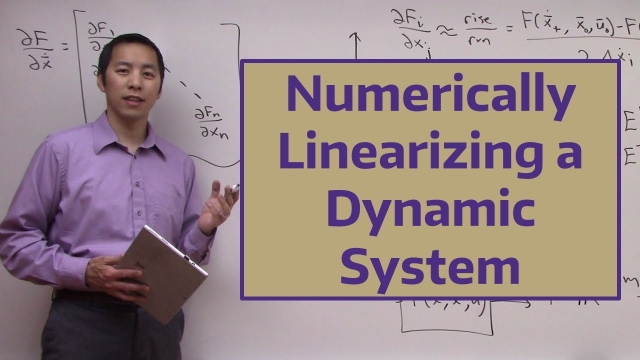

Numerically Linearizing a Dynamic System

In this video we show how to linearize a dynamic system using numerical techniques. In other words, the linearization process does not require an analytical description of the system. This...
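
A minimal Python sketch of the idea, assuming a hypothetical damped pendulum model with made-up parameters. Central finite differences approximate the Jacobians $A = \partial f/\partial x$ and $B = \partial f/\partial u$ at an operating point, treating the dynamics as a black box; the helper names are ours.

```python
import math


def pendulum(x, u, g_over_l=9.81, damping=0.5):
    """Hypothetical damped pendulum: x = [angle, angular rate], u = [torque]."""
    theta, omega = x
    return [omega, -g_over_l * math.sin(theta) - damping * omega + u[0]]


def _column_differences(g, z0, eps):
    """Central-difference Jacobian of g with respect to each entry of z0 (list of rows)."""
    n_out = len(g(z0))
    J = [[0.0] * len(z0) for _ in range(n_out)]
    for j in range(len(z0)):
        zp, zm = list(z0), list(z0)
        zp[j] += eps
        zm[j] -= eps
        gp, gm = g(zp), g(zm)
        for i in range(n_out):
            J[i][j] = (gp[i] - gm[i]) / (2 * eps)
    return J


def numerical_jacobians(f, x0, u0, eps=1e-6):
    """Linearize dx/dt = f(x, u) about (x0, u0) using only function evaluations."""
    A = _column_differences(lambda x: f(x, u0), x0, eps)
    B = _column_differences(lambda u: f(x0, u), u0, eps)
    return A, B


A, B = numerical_jacobians(pendulum, [0.0, 0.0], [0.0])
print(A)  # close to [[0, 1], [-9.81, -0.5]]
print(B)  # close to [[0], [1]]
```

Central differences have $O(\varepsilon^2)$ truncation error, versus $O(\varepsilon)$ for one-sided differences, at the cost of two function evaluations per column. The step size trades truncation error against floating-point round-off.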

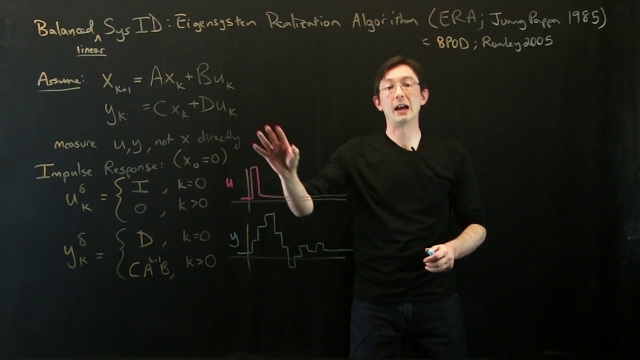

See MoreData-Driven Control: Eigensystem Realization Algorithm

In this lecture, we introduce the eigensystem realization algorithm (ERA), which is a purely data-driven algorithm to obtain balanced input-output models from impulse response data. ERA was...

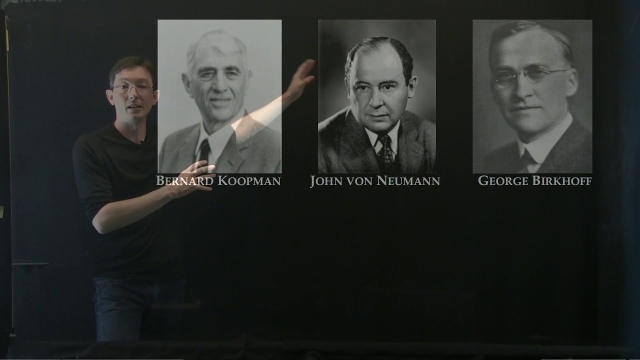

See MoreKoopman Spectral Analysis (Overview)

In this video, we introduce Koopman operator theory for dynamical systems. The Koopman operator was introduced in 1931, but has experienced renewed interest recently because of the...

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 8 - Policy Gr...

Professor Emma Brunskill

RL Course by David Silver - Lecture 2: Markov Decision Process

Explores Markov processes, including Markov reward processes, Markov decision processes, and their extensions.
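
The central identity for a Markov reward process is the Bellman equation, shown here in matrix form as a sketch, assuming a finite state space with transition matrix $\mathcal{P}$, expected immediate rewards $\mathcal{R}$, and discount $0 \le \gamma < 1$:

$$
v(s) = \mathcal{R}_s + \gamma \sum_{s'} \mathcal{P}_{ss'}\, v(s')
\quad\Longleftrightarrow\quad
v = \mathcal{R} + \gamma \mathcal{P} v
\quad\Longrightarrow\quad
v = (I - \gamma \mathcal{P})^{-1} \mathcal{R}.
$$

The inverse exists because $\mathcal{P}$ is row-stochastic, so every eigenvalue of $\gamma \mathcal{P}$ has magnitude at most $\gamma < 1$. For a Markov decision process under a fixed policy $\pi$, the same form holds with the policy-averaged quantities, $v_\pi = \mathcal{R}^\pi + \gamma \mathcal{P}^\pi v_\pi$.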

Teaching resources for a reinforcement learning course

Teaching resources by Dimitri P. Bertsekas for reinforcement learning courses. The website has links for freely available textbooks (for instructional purposes), video lectures, and course...

Data-Driven Control: The Goal of Balanced Model Reduction

In this lecture, we discuss the overarching goal of balanced model reduction: identifying key states that are most jointly controllable and observable, to capture the most input-output...

Singular Value Decomposition (SVD): Dominant Correlations

This lecture discusses how the SVD captures dominant correlations in a matrix of data.
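
One way to see the connection is that the leading right singular vector of $X$ is the dominant eigenvector of the correlation matrix $X^{*}X$. This plain-Python sketch finds it by power iteration, which is a stand-in for a full SVD routine and assumes the starting vector is not orthogonal to that eigenvector. The small data matrix is a hypothetical example with $X^{\top}X = \begin{bmatrix} 3 & 1 \\ 1 & 3 \end{bmatrix}$, so $\sigma_1 = 2$.

```python
import math


def matvec(M, v):
    """Matrix-vector product for a matrix stored as a list of rows."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in M]


def transpose(M):
    return [list(col) for col in zip(*M)]


def dominant_singular_triplet(X, iters=200):
    """Power iteration on the correlation matrix X^T X; returns (sigma_1, u_1, v_1)."""
    Xt = transpose(X)
    v = [1.0] + [0.0] * (len(X[0]) - 1)  # assumed not orthogonal to v_1
    for _ in range(iters):
        w = matvec(Xt, matvec(X, v))  # w = X^T X v
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    Xv = matvec(X, v)
    sigma = math.sqrt(sum(c * c for c in Xv))  # ||X v_1|| = sigma_1
    u = [c / sigma for c in Xv]
    return sigma, u, v


# Hypothetical data: three rows (samples) and two columns that are strongly correlated
X = [[1.0, 1.0], [1.0, 1.0], [1.0, -1.0]]
sigma, u, v = dominant_singular_triplet(X)
print(sigma, v)  # approximately 2.0 and [0.7071, 0.7071]
```

Here $v_1 \propto [1, 1]$ says the two columns mostly move together, which is the dominant correlation in the data. The convergence rate depends on the gap between the top two eigenvalues of $X^{\top}X$ (here 4 and 2).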
